Pushing the Limits of Robotics

Robots have been working behind the scenes in manufacturing for years. Now, researchers are focusing on the technology that could imbue robots with capabilities to interact with people and employ skills in the everyday world. That could mean a future where robots work side by side with people, take over mundane tasks at home, do dangerous work like firefighting and disaster response, and help us advance science and improve the human condition.

Critical to this next juncture in advanced robotics is a collaborative research effort. Engineers are not only thinking about our future with robots, but also aiming to solve the problems that are top of mind in robotics: how to make robots that are able to understand and respond to the environment; how to give them the capability to connect with each other and solve problems; and how to make their parts more humanlike, with the adaptability and flexibility we typically take for granted.

Hod Lipson's Creative Machines Lab is pushing the frontiers of 3D food printing and opening access to a new food space. The team includes a robotics class and an engineering and industrial design team, and collaborates with chefs at the International Culinary Center.

How to Make Robots Curious and Creative

There is no doubt that, in the future, robots will have a higher profile in everyday life than they do now. What intrigues roboticists, though, is developing the technology so that tomorrow’s robots will act and respond more like humans and, in effect, be self-aware enough to be resilient and adaptive.

“Our ultimate goal is to create curious and creative machines: robots that ask questions, propose ideas, design solutions, and create those solutions, including other robots,” explains Hod Lipson, professor of mechanical engineering at Columbia Engineering and director of the Creative Machines Lab. “Creating technology with self-awareness and consciousness is the ultimate challenge in engineering.”

It is also a highly futuristic one: imagine a robot that can self-replicate or self-reflect. For Lipson and his lab, the collaborative nature of academic research is proving to be the perfect birthplace for such machines.

“In academia we have the rare and precious license to work on very long-term goals. We can explore wild ideas that will only bear fruit decades from now.”

Lipson’s “wild ideas,” which include machine self-replication and programmable self-assembly, may not be so futuristic. Years ago, he took the concept of 3D printing to a new dimension, envisioning robotic printers that can create customized food. And while 3D printers that create pastries and confections are already in the marketplace, Lipson sees the technology expanding to create foods based on an individual’s biometrics, embedding nutrients that are specific to the consumer, and then cooking the result.

“Bringing software and robotics into something as basic as food prep and cooking means we open access to a new food space,” he says. “With robotics we can control nutrition and create novel foods you can’t make any other way.”

To push the idea of advanced robotic systems further, Lipson looks to natural biological processes for inspiration.

“I’m fascinated by robots as an imitation of life—both body and brain,” he says. “Can we make robots that ask questions, propose ideas, design solutions, and make them—including other robots? That’s an exciting challenge.”

Taking cues from evolution, Lipson programs robots to simulate themselves, a step toward the day when robots are self-aware and demonstrate curiosity and creativity.

“To get there, we have to understand the biological process of thinking and reasoning. What we learn not only forwards the evolution of robots, but also brings new insight to the complexity of natural systems and engineering design automation,” he says.

That insight will also bring humankind one step closer to answering an age-old question, he surmises. “If we can create self-awareness in machines, we will begin to understand life itself.”

Hod Lipson is a member of the Data Science Institute.

Hod Lipson

Lipson’s 3D printer prototype

Designing Robots to Help Overcome Effects of Neurological Disorders

Many children are fascinated by robots, and those who recently tried out a new robotic technology in Sunil Agrawal’s lab are no different. But these children have cerebral palsy, and the robotic technology they tested helped improve their balance and mobility.

Because cerebral palsy impairs muscle tone, it can cause some children to walk on their toes, a condition that affects quality of life and adds to the risk of falling and sustaining serious injury. Agrawal’s Tethered Pelvic Assist Device (TPAD), a cable-driven robotic system, applies downward forces to a child’s pelvis during training so that impaired walking patterns improve afterward.

“The children underwent 15 sessions of training over six weeks with our TPAD device and consistently showed improved force interaction with the ground that enabled them to walk more stably at a faster speed and with a larger step length,” says Agrawal, professor of mechanical engineering at the Engineering School and director of the Robotics and Rehabilitation (ROAR) Laboratory and Robotic Systems Engineering (ROSE) Laboratory. He recently received the Machine Design Award from the American Society of Mechanical Engineers for his innovative contributions to robotic exoskeleton design.

Agrawal focuses his work on rehabilitative medicine, developing robotic devices and interfaces that help adults and children move better or retrain their bodies to recover lost function.

“In our work, we use robotics to help enhance human performance by melding machine action with the human body,” he says. To accomplish that, he takes a collaborative approach to the designs of these robotic devices, working with physicians from Columbia University Medical Center as well as with researchers in mechanical engineering and robotics.

“Our robotics research requires a close interaction with clinicians, therapists, and medical professionals,” he explains. “We help them learn what our technology can provide and vice versa.”

By doing so, Agrawal is able to offer functional solutions using wearable rehabilitative robotics. His designs are lightweight, durable, easy to wear, energy efficient, and able to interface well with their wearers. He is currently starting work under a new, five-year, $5 million grant from the New York State Department of Health for further TPAD research.

Aside from the TPAD, Agrawal has other rehabilitation robotic technologies that are undergoing human testing. He has designed a robotic scoliosis brace to offer a novel platform for pediatric orthopedists. And he has just begun human testing of a robotic neck brace that is designed to treat patients with head drop (common in people with ALS) or the tremors experienced by people with cervical dystonia.

Key to the success of Agrawal’s assistive robotic technology is a deep desire to make this technology meet the personal needs of people. “Our designs are novel in the field of rehabilitation robotics and reflect the needs of patients,” he says. With this technology, he is providing the fundamental and applied knowledge necessary to advance human-robot interaction.

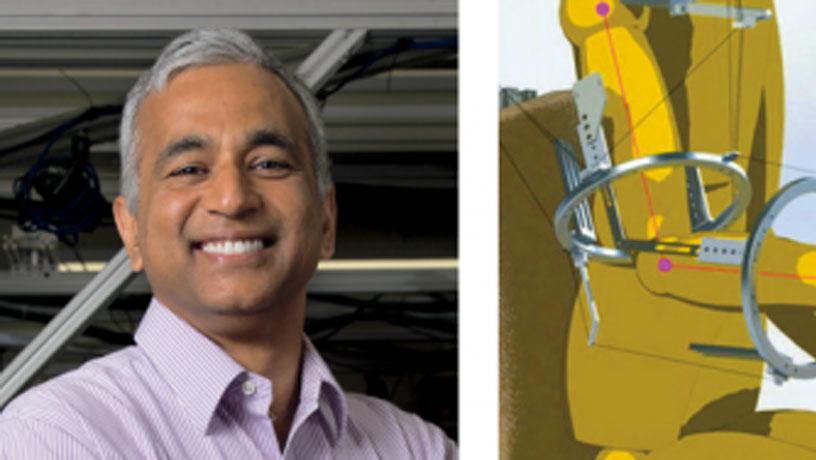

Left: Sunil Agrawal; right: A schematic drawing of a subject holding a laparoscopic tool.

Making Robots Capable of Interacting with People

Science is enabling robots to be more helpful, from delivering room service to helping with housekeeping chores at home. But for robots to be truly effective assistants, a new dimension of shared autonomy must be explored, where robots can better interact with humans and learn to adapt to new situations.

“Current technology is catching up with human need, but robots do not yet have the ability to understand complex human behavior such as nuance, emotion, and intent,” says Peter Allen, professor of computer science at Columbia Engineering. “Building these capabilities into robots is quite challenging and involves learning and understanding about humans as well as being able to write software that is up to this challenge.”

Allen and his research team are exploring the idea of shared autonomy in their quest to make robots more effective in helping people with severe motor impairments. For these people, even using a joystick or a keyboard to control an assistive device ranges from difficult to nearly impossible. Instead, Allen and his team have shown how a special sensor placed behind the ear can connect a person’s desire for an action with that action being done by a robot. “This is science that has real meaning in people’s lives,” he says.

In trials, a test subject fitted with the sensor used his ear muscles to move a cursor on a computer screen, which, in turn, resulted in the robot picking up different objects using different types of grasps.

“Even more amazing, the subject was at our collaborator Sanjay Joshi’s lab at UC Davis, and the objects were grasped and picked up in our lab at Columbia. We set up a virtual reality interface, so the subject could virtually see the robot and the choices for grasps and then select one he liked or actually choose his own grasping strategy,” says Allen. “We learned if you can off-load some of the task on the robot’s own intelligence, you can simplify the interface and have the human inject just enough of a control signal to get the job done.”

Because humans have such a highly developed control system in the brain, it makes sense to Allen to find a way to harness that power in order to make it easier for a person to guide and control a robot.

“We are now exploring the newly emerging area of Brain-Computer Interfaces (BCI) for communication and information exchange between humans and robots,” says Allen. “This has promise to really improve human-robot interaction. By experimenting with a novel suite of noninvasive sensors, we are determined to develop techniques to recognize situational state, or user awareness. This will also allow the robot and human to actually learn about each other’s state and expectations.”

Grasping with your brain in Peter Allen’s robotics laboratory, where a subject can interface with a robotic arm through EEG brain signals to perform complex grasping tasks.

Assistive Technology Leads to Autonomous Robotics

Before robots become fully autonomous, they will need to have the ability to physically manage seemingly small but very complex tasks. For instance, there’s no robot now capable of the intricate movements the human hand uses to accomplish tasks.

“Dexterous, versatile manipulation is one of the big unsolved problems in robotics,” says Matei Ciocarlie, assistant professor of mechanical engineering at the Engineering School. “Today’s robots on assembly or manufacturing lines perform the same task again and again, grasping the same parts as they come down the line. The world where humans interact is not like that. There is a wide range of objects of all shapes and sizes that people effortlessly manipulate.”

No one knows that scenario better than a person who has had a stroke and can no longer effectively grasp objects. Ciocarlie, along with Joel Stein, chair of the Department of Rehabilitation and Regenerative Medicine at Columbia University Medical Center, has cocreated MyHand, a glovelike orthosis, to help stroke survivors regain hand function. By creating this portable, lightweight glove, the researchers have not only been able to help the person wearing it manage grasping and other hand movement; they have gotten closer to the goal of developing autonomous robots.

“Manipulation is an enormously complex skill, testing the ‘hardware’ (the hand) and the ‘software’ (the brain) in equal measure,” explains Ciocarlie. “Autonomous robots cannot yet approach humanlike performance, but, when controlled by the human user making the high-level decisions, a wearable device could enable the user to perform tasks that a standalone robot could not.”

Ciocarlie, Stein, and their team have been working with stroke patients for more than a year, testing the MyHand device, collecting patient feedback, adjusting the device, and testing again.

“We’ve gone from simple movement to testing pick-and-place tasks, and, quite recently, we have begun experimenting with methods that allow the users themselves to control the device,” he says. “The patients and the research physical therapists we have been lucky enough to work with give us very objective and practical assessments and don’t hold back when criticism is warranted.”

Ciocarlie often receives important encouragement from the patients he works with. “In most cases, as we wrap up a test, the user will tell us something along the lines of, ‘You know, overall, it’s so much better than last time.’ That’s what we like to hear,” he adds.

Even more encouraging are the most recent tests, when, for a moment, a patient is so absorbed in conversation with Ciocarlie and the research team that he or she simultaneously operates MyHand without thinking about it. “That’s where we’d like to get to: an assistive device you don’t have to think about, as it just enables you to do what you want,” he says.

Curious about the programs and initiatives at Columbia focused on robotics? Learn more at robotics.columbia.edu.

Above, right: Prototype of a wearable hand orthosis, developed by Matei Ciocarlie’s lab in collaboration with Dr. Joel Stein (Columbia University Medical Center), used to assess the level of force needed to assist hand extension in stroke patients; above, top: Matei Ciocarlie; bottom: Ciocarlie works with students in the Robotic Manipulation and Mobility Lab.