Artificial intelligence can design an autonomous robot in 30 seconds flat on a laptop or smartphone.

It’s not quite time to panic about just anybody being able to create the Terminator while waiting at the bus stop: as reported in a recent study, the robots are simple machines that scoot along in straight lines and do not perform more complex tasks. (Intriguingly, however, they always seem to develop legs rather than a body plan for wiggling, inching along like an inchworm or slithering.) But with more work, the method could democratize robot design, says study author Sam Kriegman, a computer scientist and engineer at Northwestern University.

“When only large companies, governments and large academic institutions have enough computational power [to design with artificial intelligence], it really limits the diversity of the questions being asked,” Kriegman says. “Increasing the accessibility of these tools is something that’s really exciting.”

AI can now write essays and drive cars, so design might seem like a logical next step. But it’s not easy to create an algorithm that can effectively engineer a real-world product, says Hod Lipson, a roboticist at Columbia University, who was not involved in the research. “Many questions remain,” Lipson says of the new study, “but I think it’s a huge step forward.”

The method uses a version of simulated evolution to create robots that can do a specific task—in this case, forward locomotion. Previously, creating evolved robots involved generating random variations, testing them, refining the best performers with new variations and testing those versions again. That requires a lot of computing power, Kriegman says.
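To make that cost concrete, here is a minimal Python sketch of the random-variation loop described above. It is an illustration, not the researchers' code: the `fitness` function, the `IDEAL` body plan and all parameter values are hypothetical stand-ins for a physics simulation that would score how far a robot moves. Each call to `fitness` represents one full simulated test, so the evaluation counter shows why this approach consumes so much computing power.

```python
import random

# Hypothetical stand-in for a physics simulation: scores a "body plan"
# (a list of numbers) by how close it is to an assumed ideal design.
# Higher is better; the best possible score is 0.
IDEAL = [0.8, -0.3, 0.5, 0.1]

def fitness(body):
    return -sum((b - t) ** 2 for b, t in zip(body, IDEAL))

def evolve(generations=200, population=20, mutation_scale=0.1, seed=0):
    """Random-variation evolution: mutate, test every variant, keep the best."""
    rng = random.Random(seed)
    best = [rng.uniform(-1, 1) for _ in IDEAL]  # random starting body
    best_score = fitness(best)
    evaluations = 1
    for _ in range(generations):
        # Generate random variations of the current best body.
        variants = [[b + rng.gauss(0, mutation_scale) for b in best]
                    for _ in range(population)]
        # Test each one; every test stands in for a full simulation run.
        for v in variants:
            score = fitness(v)
            evaluations += 1
            if score > best_score:
                best, best_score = v, score
    return best, best_score, evaluations

best, score, evals = evolve()
print(f"score={score:.4f} after {evals} simulated tests")
```

With 20 variants per generation over 200 generations, the loop runs 4,001 tests, and in a real design pipeline every one of them would be a full simulation.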

He and his colleagues instead turned to a method called gradient descent, which is more like directed evolution. The process starts with a randomly generated body design for the robot, but it differs from random evolution by giving the algorithm the ability to gauge how well a given body plan will perform, compared with the ideal. For each iteration, the AI can home in on the pathways most likely to lead to success. “We provided the [algorithm] a way to see if a mutation would be good or bad,” Kriegman says.
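The contrast can be sketched in Python on the same kind of toy problem. This is an assumption-laden illustration, not the study's method: it replaces the simulator with a hypothetical scoring function, `loss`, whose gradient can be written down directly. The gradient plays the role Kriegman describes, telling the algorithm whether a small change to each parameter would be good or bad, so every step moves in a useful direction instead of sampling random variants.

```python
# Hypothetical toy objective standing in for the simulator.
IDEAL = [0.8, -0.3, 0.5, 0.1]

def loss(body):
    """Squared distance from the assumed ideal body plan (lower is better)."""
    return sum((b - t) ** 2 for b, t in zip(body, IDEAL))

def grad(body):
    """Gradient of the loss: shows which way each parameter should move."""
    return [2 * (b - t) for b, t in zip(body, IDEAL)]

def gradient_descent(start, learning_rate=0.25, steps=10):
    body = list(start)
    for _ in range(steps):
        g = grad(body)
        # Step every parameter downhill, against its gradient.
        body = [b - learning_rate * gi for b, gi in zip(body, g)]
    return body

start = [0.0, 0.0, 0.0, 0.0]
final = gradient_descent(start)
print(f"loss: {loss(start):.4f} -> {loss(final):.6f} in 10 steps")
```

With this learning rate, each step halves the remaining distance to the ideal, so 10 steps shrink the loss by a factor of about a million (2^20). Real robot bodies are far messier; the sketch only shows why a usable gradient can cut the number of tests so sharply.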

In their computer simulations, the researchers started their robots as random shapes, gave the AI the target of developing terrestrial locomotion and then set the nascent bots loose in a virtual environment to evolve. It took just 10 simulations and a matter of seconds to reach an optimal state. From the original, nonmoving body plan, the robots were able to start moving at up to 0.5 body length per second, about half of the average human walking speed, the researchers reported on October 3 in the Proceedings of the National Academy of Sciences USA. The robots also consistently evolved legs and started walking, the team found. It was impressive that with just a few iterations, the AI could build something functional from a random form, Lipson says.

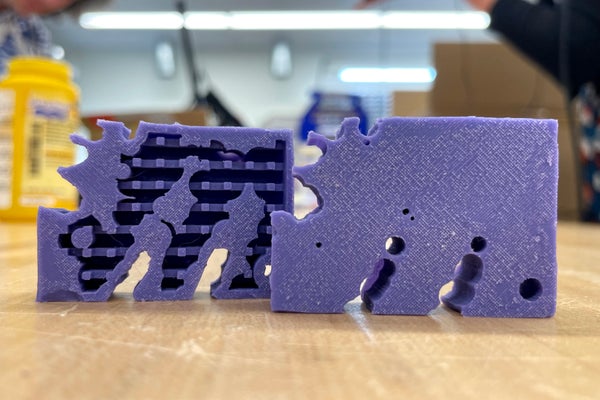

To see if the simulations worked in practice, the researchers built examples of their best-performing robot by 3-D printing a mold of the design and filling it with silicone. They pumped air into small voids in the shape to simulate muscles contracting and expanding. The resulting robots, each about the size of a bar of soap, crept along like blocky little cartoon characters.

An AI designed this little walking robot. Credit: Northwestern University

“We’re really excited about it just moving in the right direction and moving at all,” Kriegman says, because robot designs that work in AI simulations don’t necessarily work in the real world.

The research represents a step toward more advanced robot design, even though the robots are quite simple and can complete only one task, says N. Katherine Hayles, a professor emerita at Duke University and a research professor at the University of California, Los Angeles. She is also the author of How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics (University of Chicago Press, 1999). The gradient descent method is already well established in designing artificial neural networks, or neural nets (approaches to AI inspired by the human brain), so it would be powerful to put brains and bodies together, she says.

“The real breakthrough here, in my opinion, is going to be when you take the gradient descent methods to evolve neural nets and connect them up with an evolvable body,” Hayles says. The two can then coevolve, as happens in living organisms.

AI that can design new products could get humans unstuck from a variety of pernicious problems, Lipson says, from designing next-generation batteries that could help ameliorate climate change to finding new antibiotics and medications for currently incurable diseases. These simple, chunky robots are a step toward this goal, he says.

“If we can design algorithms that can design things for us, all bets are off,” Lipson says. “We are going to experience an incredible boost.”