Multi-mobile (M2) Computing System Makes Android and iOS Apps Sharable on Multiple Devices

The M2 system integrates cameras, displays, microphones, speakers, sensors, and GPS to improve audio conferencing, media recording, and Wii-like gaming, and allow greater access for disabled users

Media Contact

Holly Evarts, Director of Strategic Communications and Media Relations

212-854-3206 (o), 347-453-7408 (c), [email protected]

About the Study

The study is titled “Heterogeneous Multi-Mobile Computing.”

The authors are Naser AlDuaij, Alexander Van’t Hof, and Jason Nieh, all of the Department of Computer Science, Columbia Engineering.

This work was supported in part by a Google Research Award, and NSF grants CNS-1717801 and CNS-1563555.

The authors declare no financial or other conflicts of interest.

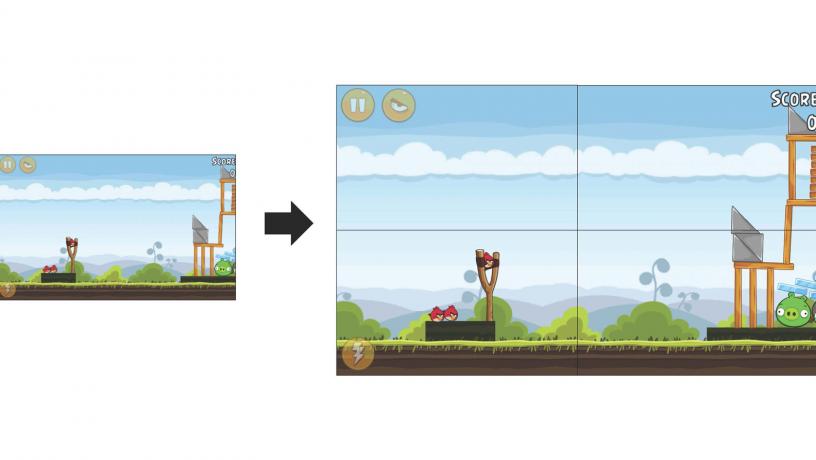

Multi-mobile computing using fused devices

New York, NY—June 20, 2019—Computer scientists at Columbia Engineering have developed a new computing system that enables current, unmodified mobile apps to combine and share multiple devices, including cameras, displays, speakers, microphones, sensors, and GPS, across multiple smartphones and tablets. Called M2, the new system operates across heterogeneous systems, including Android and iOS, combining the functionality of multiple mobile systems into a more powerful one that gives users a seamless experience across the various systems.

With the advent of bezel-less smartphones and tablets, M2 answers the growing demand for multi-mobile computing: users can dynamically switch their Netflix or Spotify streams from their smartphones to a collection of other nearby systems for a larger display or better audio. Instead of using smartphones and tablets in isolation, users can combine their systems’ functionalities, since the systems can now all work together. Users can even combine photos taken from different cameras and from different angles into a single, detailed 3D image.

“Given the many popular and familiar apps out there, we can combine and mix systems to do cool things with these existing unmodified apps without forcing developers to adopt a new set of APIs and tools,” says Naser AlDuaij, the study’s lead author and a PhD student working with Computer Science Professor Jason Nieh. “We wanted to use M2 to target all apps without adding any overhead to app development. Users can even use M2 to run Android apps from their iPhones.”

The challenge for the team was twofold: mobile systems are highly heterogeneous, and sharing devices across heterogeneous systems is difficult to support. Beyond hardware heterogeneity, there are also many diverse platforms and OS versions, with a wide range of incompatible device interfaces that dictate how software applications communicate with hardware.

While different mobile systems have different APIs and low-level devices are vendor-specific, the high-level device data provided to apps is generally in a standard format. So AlDuaij took a high-level device data approach and designed M2 to import and export device data in a common format to and from systems, avoiding the need to bridge incompatible mobile systems and device APIs. This method enables M2 not only to share devices, but also to mix and combine devices that produce different types of data, since it can aggregate or manipulate device data in a known format.
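The idea of exporting and importing device data in a common format can be illustrated with a minimal Python sketch. The release does not describe M2's actual data format or transport, so everything here is an assumption made for illustration: the `DeviceData` record, its field names, and the choice of JSON are all hypothetical.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class DeviceData:
    """Hypothetical platform-neutral record for one device sample."""
    device: str        # kind of device, e.g. "accelerometer" or "touchscreen"
    source: str        # identifier of the system that produced the sample
    timestamp_ms: int  # capture time in milliseconds
    values: list       # high-level readings, already in standard units


def export_data(sample: DeviceData) -> bytes:
    """Serialize a sample so that any other system can read it."""
    return json.dumps(asdict(sample), sort_keys=True).encode("utf-8")


def import_data(payload: bytes) -> DeviceData:
    """Rebuild a sample from bytes received from another system."""
    return DeviceData(**json.loads(payload.decode("utf-8")))


# A reading exported on one system and imported on another is unchanged.
reading = DeviceData("accelerometer", "phone-a", 1561032000000, [0.0, 9.81, 0.1])
assert import_data(export_data(reading)) == reading
```

Because both sides agree only on the record, neither needs to know which platform or device API produced the data.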

“With M2, we are introducing device transformation, a framework that enables different devices across disparate systems to be substituted and combined with one another to support multi-mobile heterogeneity, functionality, and transparency,” says AlDuaij, who presented the study today at MobiSys 2019, the 17th ACM International Conference on Mobile Systems, Applications, and Services. “We can easily manipulate or convert device data because it’s in a standard format. For example, we can easily scale and aggregate touchscreen input. We can also convert display frames to camera frames or vice versa. M2 enables us to reinterpret or represent different devices in different ways.”
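Scaling touchscreen input, as AlDuaij describes, can be pictured with a short Python sketch. It is an illustration under stated assumptions rather than M2's code: the `TouchEvent` type and `scale_touch` function are hypothetical names, and the sketch assumes a touch is reported as pixel coordinates together with the size of the screen that produced it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TouchEvent:
    """Hypothetical touch sample: a position plus the source screen size."""
    x: float
    y: float
    width: int   # width in pixels of the screen the coordinates refer to
    height: int  # height in pixels of the screen the coordinates refer to


def scale_touch(event: TouchEvent, target_width: int, target_height: int) -> TouchEvent:
    """Map a touch from its source screen into a target screen's coordinates."""
    return TouchEvent(
        x=event.x * target_width / event.width,
        y=event.y * target_height / event.height,
        width=target_width,
        height=target_height,
    )


# A touch at the center of a 1080x1920 screen lands at the center of a 720x1280 one.
center = scale_touch(TouchEvent(540, 960, 1080, 1920), 720, 1280)
assert (center.x, center.y) == (360.0, 640.0)
```

Once touches from every system are expressed in the same coordinate space, they can be merged into a single input stream for the app.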

Among M2’s device “transformations” are fusing device data from multiple devices to provide a multi-headed display scenario for a better “big screen” viewing or gaming experience. By converting accelerometer sensor data to input touches, M2 can transform a smartphone into a Nintendo Wii-like remote to control a game on another system. Eye movements can also be turned into touchscreen input, a useful accessibility feature for disabled users who cannot use their hands.
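Converting accelerometer data into touch input can be sketched in a few lines of Python. This is a simplified illustration, not M2's method: the function name `tilt_to_touch` is hypothetical, and the sketch assumes readings are in meters per second squared and that tilting the phone moves a pointer linearly across the target screen.

```python
G = 9.81  # standard gravity in m/s^2, used to normalize tilt readings


def tilt_to_touch(ax: float, ay: float, width: int, height: int) -> tuple:
    """Map accelerometer x/y readings to a pixel position on a target screen.

    A phone lying flat (ax = ay = 0) points at the center of the screen;
    tilting it fully to one side moves the pointer to the matching edge.
    """
    # Normalize to [-1, 1] and clamp so that shaking cannot leave the screen.
    nx = max(-1.0, min(1.0, ax / G))
    ny = max(-1.0, min(1.0, ay / G))
    # Shift to [0, 1], then scale to the last valid pixel index on each axis.
    x = (nx + 1.0) / 2.0 * (width - 1)
    y = (ny + 1.0) / 2.0 * (height - 1)
    return round(x), round(y)


assert tilt_to_touch(0.0, 0.0, 1081, 1921) == (540, 960)  # flat phone: center
```

A real controller would likely also smooth the readings over time, which is omitted here for brevity.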

For audio conferencing without costly specialized equipment, M2 can be deployed on smartphones across a room to leverage their microphones from multiple vantage points, providing superior speaker-identifiable sound quality and noise cancellation. M2 can redirect a display to a camera so that stock camera apps can record a Netflix or YouTube video. It can also enable panoramic video recording by fusing the camera inputs from two systems to create a wider, sweeping view. One potentially popular application would let parents seated next to each other record a wide-angle view of their child’s school or sports performance.

“Doing all this without having to modify apps means that users can continue to use their favorite apps with an enhanced experience,” AlDuaij says. “M2 is a win-win—users don’t need to worry about which apps would support such functionality and developers don’t need to spend time and money to update their apps.”

Using M2 is simple: all a user would have to do is download the M2 app from Google Play or Apple’s App Store. No other software is needed. One mobile system runs the unmodified app; the input and output from all systems are combined and shared with the app.

“Our M2 system is easy to use, runs efficiently, and scales well, especially compared to existing approaches,” Nieh notes. “We think that multi-mobile computing offers a broader, richer experience with the ability to combine multiple devices from multiple systems together in new ways.”

The Columbia team has started discussions with mobile OS vendors and phone manufacturers to incorporate M2 technologies into the next releases of their products. With a few minor modifications to current systems, mobile OS vendors can make multi-mobile computing broadly available to everyone.

###

Columbia Engineering

Columbia Engineering, based in New York City, is one of the top engineering schools in the U.S. and one of the oldest in the nation. Also known as The Fu Foundation School of Engineering and Applied Science, the School expands knowledge and advances technology through the pioneering research of its more than 220 faculty, while educating undergraduate and graduate students in a collaborative environment to become leaders informed by a firm foundation in engineering. The School’s faculty are at the center of the University’s cross-disciplinary research, contributing to the Data Science Institute, Earth Institute, Zuckerman Mind Brain Behavior Institute, Precision Medicine Initiative, and the Columbia Nano Initiative. Guided by its strategic vision, “Columbia Engineering for Humanity,” the School aims to translate ideas into innovations that foster a sustainable, healthy, secure, connected, and creative humanity.