Robot shows 'empathy' toward another robot in new study

A Columbia Engineering robot has learned to predict its partner robot's future actions, demonstrating what researchers see as a glimmer of primitive empathy.

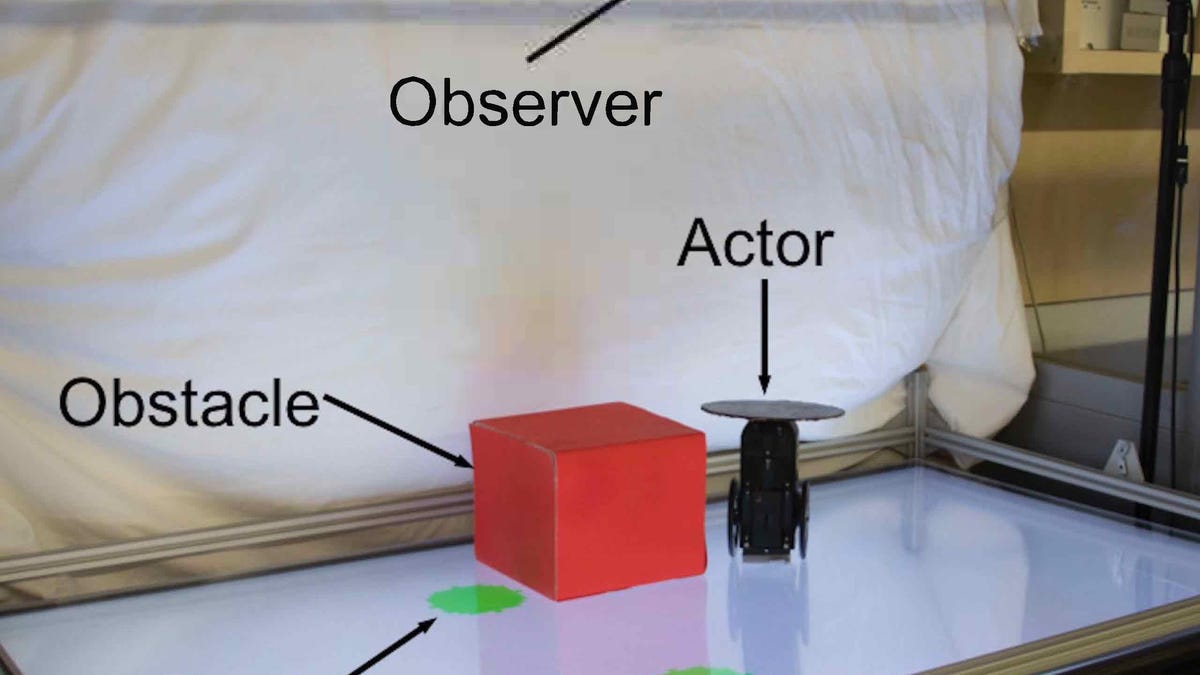

One robot runs around in a playpen trying to catch the visible green food, while another machine learns to predict the first robot's behavior purely through visual observation.

Robots can climb rough terrain, help humans follow social distancing protocols, and even dance like nobody's watching. Now researchers have discovered they might show glimmers of empathy.

A new study from Columbia Engineering, published Monday in Nature's journal Scientific Reports, shows how one robot learned to predict its partner robot's future actions from just a few video frames. "Our findings begin to demonstrate how robots can see the world from another robot's perspective," lead study author Boyuan Chen said in a statement.

The researchers placed one robot in a playpen roughly 3 by 2 feet in size and programmed it to find and move toward any green circle in its view. Sometimes the robot would spot a green circle in its camera view and move directly toward it. Other times, a tall red box would block the green circle from view, so the robot would move toward a different green circle or not move at all.

After watching its partner move around for two hours, the observing robot began to anticipate its path. Eventually, it was able to predict the other robot's path 98 out of 100 times across varying situations.

"The ability of the observer to put itself in its partner's shoes, so to speak, and understand, without being guided, whether its partner could or could not see the green circle from its vantage point, is perhaps a primitive form of empathy," Chen said.

The researchers expected the observer robot to learn to make predictions about the subject robot's actions, but they didn't anticipate how accurately it could predict the other robot's future moves from only a few seconds of video.

Although the behaviors the robots exhibited in this study are far less sophisticated than those of humans, the researchers believe they could be a first step toward endowing robots with a "Theory of Mind."

Theory of Mind, the ability to understand that others have their own beliefs, needs and intentions, is foundational for successful social interaction. Humans typically begin to develop it around age 3, when children start to understand that other people have needs and behaviors different from their own. The same ability is essential for understanding complex social interactions such as cooperation, competition, empathy and deception.

Hod Lipson, a Columbia mechanical engineering professor and co-author of the study, says the team's findings also raise ethical questions. As robots become smarter and more useful, there could come a time when they learn to anticipate how humans think and, potentially, to manipulate those thoughts.

"We recognize that robots aren't going to remain passive instruction-following machines for long," Lipson said. "Like other forms of advanced AI, we hope that policymakers can help keep this kind of technology in check so that we can all benefit."

The study, which was conducted at Columbia Engineering's Creative Machines Lab, is part of a larger effort to give robots the ability to understand and predict the goals of other robots from visual observations alone.