NSF Funds a Robot Teleoperation System that Could Transform the Manufacturing Workforce

Professors Shuran Song, Steven Feiner, and Matei Ciocarlie are developing a novel platform that enables factory workers to work remotely

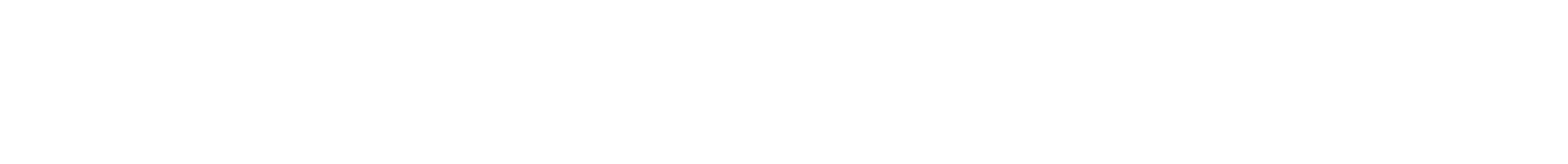

The proposed human-in-the-loop assembly system consists of three major components: (1) A perception system that senses the robot worksite and converts it into a visual physical scene representation, where each object is represented with its 3D model and relevant physical attributes. (2) A 3D VR user interface that allows a human operator to specify the assembly goal by manipulating these virtual 3D models. (3) A goal-driven deep reinforcement learning algorithm that infers an effective low-level planning policy given the assembly goal and the robot hardware configuration. This algorithm is also able to infer its probability of success and use it to determine when to request human assistance.
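The hand-off described in component (3), where the planner estimates its own probability of success and asks for help when that estimate is low, could be sketched roughly as follows. This is an illustration only, not the researchers' implementation: the `PlanResult` type, the `dispatch` function, and the 0.8 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PlanResult:
    """Hypothetical planner output: low-level actions plus a self-estimated success probability."""
    actions: List[str]
    success_probability: float


def dispatch(result: PlanResult, threshold: float = 0.8) -> str:
    """Decide whether the robot proceeds alone or asks a human for help.

    The threshold is an arbitrary value chosen for illustration.
    """
    if not 0.0 <= result.success_probability <= 1.0:
        raise ValueError("success_probability must be in [0, 1]")
    if result.success_probability < threshold:
        return "request_human_assistance"
    return "execute_autonomously"


confident = PlanResult(actions=["grasp", "insert"], success_probability=0.95)
uncertain = PlanResult(actions=["grasp", "rotate", "insert"], success_probability=0.40)
print(dispatch(confident))
print(dispatch(uncertain))
```

In a real system the threshold would presumably be tuned per task, trading off operator interruptions against failed assembly attempts.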

Remote work has long been a hotly discussed topic and is even more so now, as the COVID-19 pandemic accelerates the adoption of remote working in many industries around the world. But while some businesses have easily adopted a remote working model, doing so has been especially difficult for manufacturing and warehouse workers because the necessary infrastructure doesn’t yet exist. Most manufacturing jobs require workers to be physically present in the workplace to perform their jobs.

Researchers from Columbia Engineering recently won a National Science Foundation (NSF) grant to develop a robot teleoperation system that will allow workers to work remotely. The five-year $3.7M award will support the project, “FMRG: Adaptable and Scalable Robot Teleoperation for Human-in-the-Loop Assembly.”

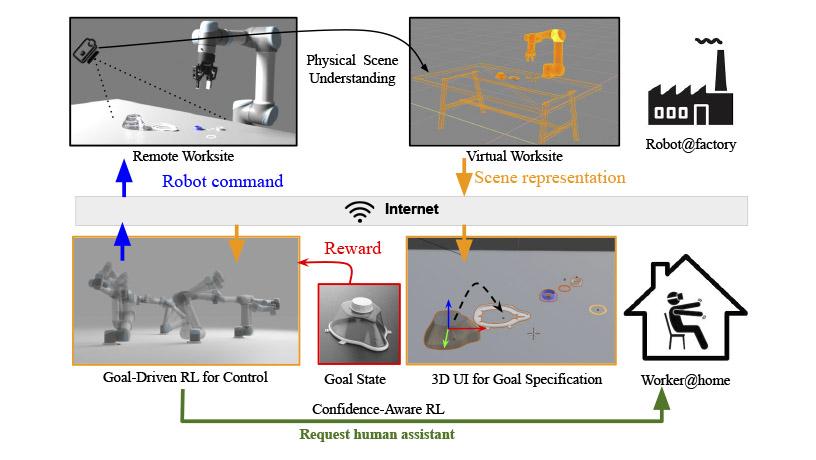

Today, a significant number of manufacturing jobs require different levels of physical labor and interaction, including assembly, kitting, and packaging. All these tasks require workers to be physically present in the workplace to get the job done. The new NSF-funded project, led by Shuran Song, an assistant professor of computer science, aims to change this by developing an adaptable and scalable robot teleoperation system: a remote operation system, paired with robots, that enables workers to perform physical labor tasks remotely.

“We envision a system that will allow workers who are not trained roboticists to operate robots,” said Song, who joined the department in 2019. “I am excited to see how this research could eventually provide greater job access to workers regardless of their geographical location or physical ability.”

The proposed system, unlike those currently available, will be easy for all workers to use and will run over typical internet connections, without the need for expensive and complex devices. Song's team is leveraging recent advances in perception, machine learning, and 3D user interface design to achieve adaptable and scalable teleoperation.

The researchers have developed a physical-scene-understanding algorithm that converts visual observations (camera shots) of a real-world robot workspace into a virtual, manipulable 3D scene representation. The next step is designing a 3D and virtual reality (VR) user interface that allows workers to specify high-level task goals using the scene representation. The final step in the platform is to create a goal-driven reinforcement learning algorithm that infers an effective planning policy, given the task goals and the robot configuration. In sum, the system enables the factory worker to specify task goals without expert knowledge of the robot hardware and configuration.
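To make the idea of specifying a goal through the scene representation concrete, here is a minimal sketch under stated assumptions. It treats the scene as a list of objects with 3D positions and derives the goal from what the operator moved in the virtual scene. The `SceneObject` type, its fields, and `goal_displacements` are hypothetical names for illustration; the article does not describe the project's actual data structures.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class SceneObject:
    """Hypothetical entry in the virtual scene: an object with a position and one physical attribute."""
    name: str
    position: Vec3
    mass_kg: float


def goal_displacements(scene: List[SceneObject], goal: List[SceneObject]) -> Dict[str, Vec3]:
    """Return, for each object the operator moved, the offset from its sensed to its target position."""
    sensed = {obj.name: obj for obj in scene}
    moved: Dict[str, Vec3] = {}
    for target in goal:
        source = sensed[target.name]
        delta = tuple(t - s for t, s in zip(target.position, source.position))
        if any(abs(d) > 1e-9 for d in delta):  # skip objects left where they were
            moved[target.name] = delta
    return moved


# Sensed scene (from perception) and the arrangement the operator set up in VR.
scene = [SceneObject("peg", (0.0, 0.0, 0.0), 0.1), SceneObject("base", (1.0, 0.0, 0.0), 2.0)]
goal = [SceneObject("peg", (0.5, 0.0, 0.25), 0.1), SceneObject("base", (1.0, 0.0, 0.0), 2.0)]
print(goal_displacements(scene, goal))
```

The operator never touches robot-level commands here; a downstream planner, such as the learned policy the article describes, would be responsible for turning these object-level targets into motions.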

There are three principal investigators on this multidisciplinary project. Song is directing the design of the vision-based perception and machine learning methods for physical scene understanding. Computer Science Professor Steven Feiner is focusing on developing the 3D and VR user interface, and Matei Ciocarlie, associate professor of mechanical engineering, is building the robot learning and control algorithms. Once the modules are built, the PIs will integrate them into the final system.

“Our team will be performing research that cuts across the fields of machine perception, human-computer interaction, human-robot interaction, and machine learning to make possible remote work at a far higher level than previous research on teleoperation,” said Steven Feiner. He and his students from the Computer Graphics and User Interfaces Lab will design, implement, and evaluate an experimental 3D and VR user interface that will allow remote workers to specify high-level task goals for assembly line robots. The interface will do this without requiring workers to understand specific real-world robot configurations or how to control them. If something goes wrong on the factory floor, the interface will help the worker get assistance from colleagues who have the lower-level knowledge needed to troubleshoot and fix the problem.

“Enabling non-roboticists to teleoperate complex robots for difficult manipulation tasks has been a long-standing interest of mine,” said Matei Ciocarlie, whose Robotic Manipulation and Mobility Lab has, in past projects, focused primarily on situations where the person and the robot are working side-by-side, collaborating on the same task. “For this project, we are working on a much more challenging problem: the human is remote, seeing the scene through the robot’s eyes, and potentially trying to supervise many robots at the same time.”

The new project is aimed at making it possible for people to work from home on assembly-line tasks that currently have to be done onsite. There are many advantages to achieving this goal. Moving jobs off the factory floor is likely to improve safety. Eliminating the work commute not only saves time and money but also increases the number of jobs available to workers, who would no longer need to live within commuting distance. Avoiding the need for the worker to perform physically difficult tasks could make it possible for older workers or workers with disabilities to do work that they cannot currently do. There are also obvious applications to situations, such as the current pandemic, in which working in person can be dangerous.

The research team is collaborating with colleagues at Teachers College to help design and run user studies to evaluate the VR user interface, and with LaGuardia Community College and NYDesigns to translate research results to industrial partners and develop training programs to educate and prepare the future manufacturing workforce.

“This research investigates how we can use advanced robotics technologies to enhance human capabilities,” said Song. “And it has the potential to change how remote work is done.”